AI Intelligence Memory Performance Debate: Zep Mem0 Benchmarks in Doubt

A recent announcement in the field of AI Intelligent Body Memory has sparked a lot of interest in the industry.Mem0 Company publishes research reports,宣称其产品在 AI 智能体记忆技术方面达到了行业领先(SOTA)水平,并在特定基准测试中超越了包括 Zep 在内的竞争对手。然而,这一说法迅速遭到了 Zep 团队的质疑。Zep 指出,在正确实施的情况下,其产品在 Mem0 选用的 LoCoMo 基准测试中,性能实际上要高出 Mem0 大约 24%。这一显著差异,促使人们对基准测试的公正性、实验设计的严谨性以及最终结论的可靠性进行更深入的探究。

In the highly competitive arena of Artificial Intelligence, earning the SOTA (State-of-the-Art) designation means a lot to any company. It not only means technological leadership, but also attracts investment, talent and market attention. Therefore, any statement about SOTA, especially conclusions reached through benchmarking, should be scrutinized.

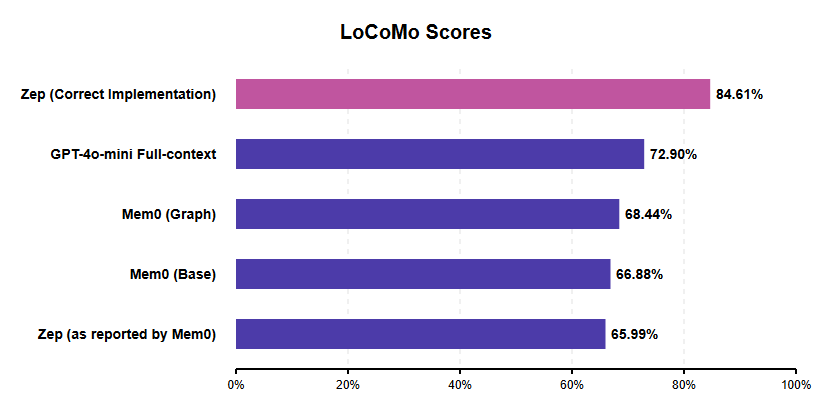

Zep Claim: LoCoMo Test Results Reversed With Proper Implementation

In its response, the Zep team noted that when the LoCoMo experiment was configured in accordance with its product's best practices, the results were very different from the Mem0 report.

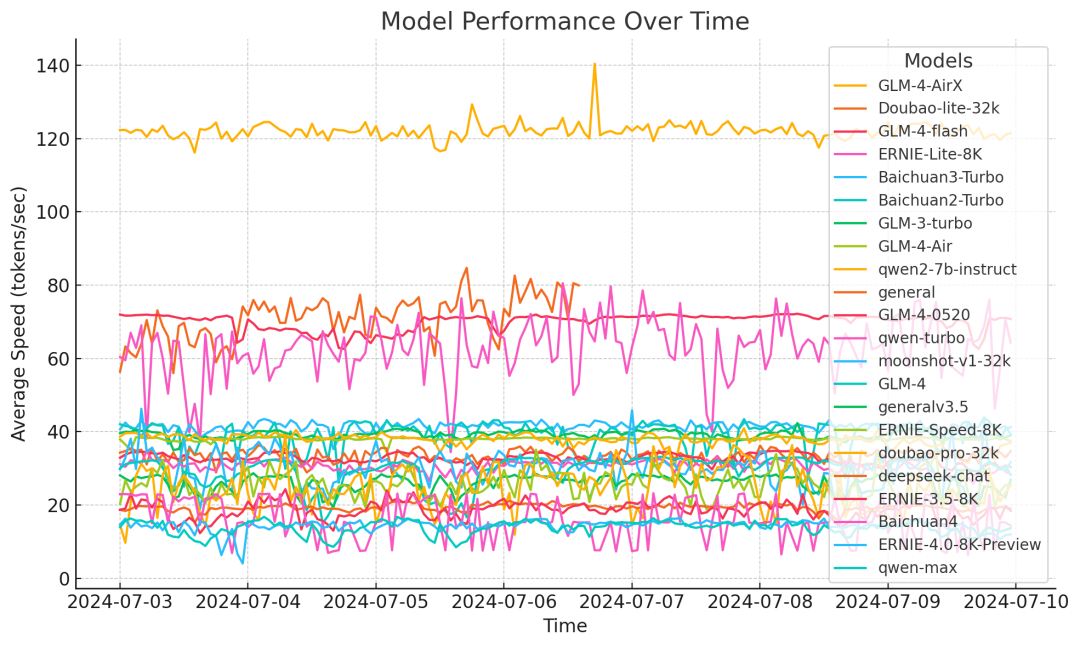

All scores are from Mem0 reports except for "Zep (Correct)" discussed here.

According to Zep's published evaluation, its product J-score reached 84.61%In comparison to the Mem0 optimal configuration (Mem0 Graph) of about 68.41 TP3T, it achieves about 23.6% of relative performance gains. This contrasts with Zep's score of 65.99% reported in the Mem0 paper, which Zep suggests is likely to be a direct result of an implementation error as will be discussed below.

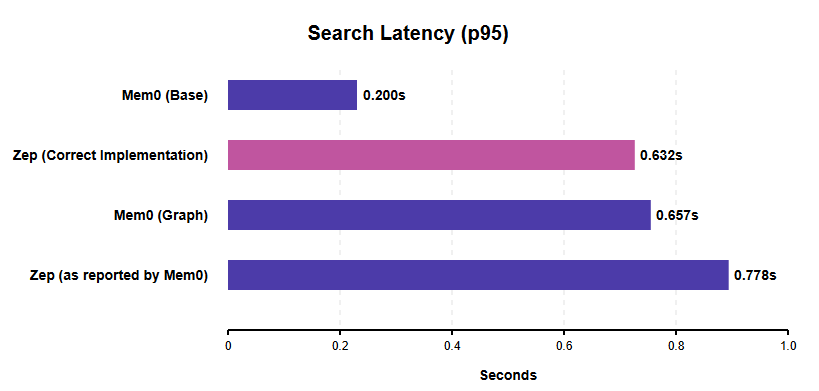

In terms of **search latency (p95 search latency)**, Zep notes that when his system is properly configured for concurrent searches, the p95 search latency is 0.632 seconds. This is better than Zep's 0.778 seconds in the Mem0 report (which Zep speculates is inflated by its sequential search implementation) and slightly faster than Mem0's graph search latency (0.657 seconds).

All scores are from Mem0 reports except for "Zep (Correct)" discussed here.

It is worth noting that Mem0's base configuration (Mem0 Base) shows lower search latency (0.200 seconds). However, this is not an entirely equivalent comparison, as Mem0 Base uses a simpler vector store/cache that does not have the relational capabilities of a graph database, and it also has the lowest accuracy score of the Mem0 variants. Zep's efficient concurrent searches demonstrate strong performance for production-grade AI intelligences that require more complex memory structures and are looking for responsiveness, and Zep explains that its latency data was measured in an AWS us-west-2 environment with a NAT setup for transmission.

LoCoMo benchmarking limitations raise questions

Mem0's decision to choose LoCoMo as the benchmark for its research was itself scrutinized by Zep, who identified several fundamental flaws in the benchmark at both the design and execution levels. Designing and executing a comprehensive and unbiased benchmark is a difficult task in itself, requiring in-depth expertise, adequate resources, and a thorough understanding of the internal mechanisms of the system under test.

Key issues with LoCoMo identified by the Zep team include:

- Insufficient dialog length and complexity:LoCoMo 中的对话平均长度在 16,000 到 26,000 Tokens 之间。虽然这看起来很长,但对于现代 LLM 而言,这通常在其上下文窗口能力范围之内。这种长度未能真正对长期记忆检索能力构成压力。一个有力的证据是,Mem0 自己的结果显示,其系统的表现甚至不如一个简单的“全上下文基线”(即将整个对话内容直接输入 LLM)。全上下文基线的 J 分数约为 73%,而 Mem0 的最佳分数约为 68%。如果简单提供所有文本就能获得比专业记忆系统更好的结果,那么该基准测试就未能充分考察真实世界 AI 智能体交互中对记忆能力的严苛要求。

- Failure to test critical memory functions: The benchmark lacks the "knowledge updating" problem it was designed to test. Memory updating after information changes over time (e.g., when a user changes jobs) is a critical feature for AI memory.

- Data quality issues: The dataset itself suffers from several quality flaws:

- Category of unavailability: Category 5 could not be used due to the lack of standardized answers, forcing both Mem0 and Zep to exclude this category from their evaluations.

- multimodal error: Some of the questions asked about images, but the necessary information did not appear in the image descriptions generated by the BLIP model during the dataset creation process.

- Misattribution of speakers: Some questions incorrectly attribute behaviors or statements to the wrong speaker.

- Lack of clarity in the definition of the problem: Some questions are ambiguous and may have more than one potentially correct answer (e.g., asking when someone goes camping when that person may have been there in both July and August).

Given these errors and inconsistencies, the reliability of LoCoMo as an authoritative measure of the memory performance of AI intelligences is questionable. Unfortunately, LoCoMo is not an isolated case. Several other benchmarks, such as HotPotQA, have been criticized for using LLM training data (e.g., Wikipedia), oversimplified questions, and factual errors. This highlights the ongoing challenge of conducting robust benchmarking in AI.

Mem0 Criticism of Zep's assessment methodology

In addition to the controversy surrounding the LoCoMo benchmark itself, the comparison of Zep in the Mem0 paper is, according to Zep, based on a flawed implementation and thus fails to accurately reflect Zep's true capabilities:

- Wrong user model: Mem0 uses a user graph structure designed for a single user-assistant interaction, but assigns user roles to the dialog'sboth parties involvedParticipants. This likely obfuscated Zep's internal logic, causing it to view conversations as a single user constantly switching identities between messages.

- Improper timestamp handling: Timestamps are passed by appending them to the end of the message, rather than using the Zep-specific created_at field. This non-standard approach interferes with Zep's timing inference capabilities.

- Sequential vs. parallel search: The search operation is executed sequentially rather than in parallel, which artificially inflates Zep's search latency as reported by Mem0.

Zep argues that these implementation errors fundamentally misinterpreted how Zep was designed to operate and inevitably led to the poor performance reported in the Mem0 paper.

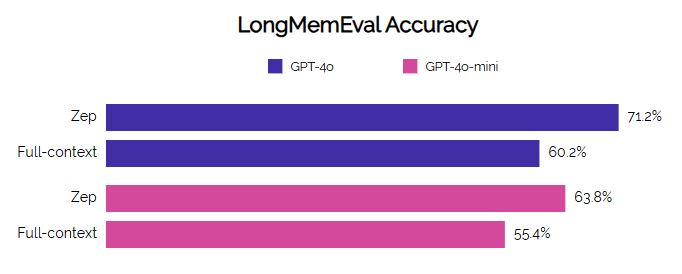

The Industry Calls for Better Benchmarking: Why Zep Favors LongMemEval

The controversy over LoCoMo has reinforced the industry's need for more robust and relevant benchmarking, and the Zep team has expressed a preference for benchmarks like the LongMemEval Such an evaluation criterion, as it compensates for LoCoMo's shortcomings in several ways:

- Length and Challenge: Contains significantly longer conversations (115k Tokens on average) that really test the contextual limits.

- Temporal Reasoning and State Change: Explicitly tests understanding of time and the ability to deal with changes in information (updating of knowledge).

- mass (in physics): Planned and designed by hand with the aim of ensuring high quality.

- Corporate Relevance: More representative of the complexity and requirements of real-world enterprise applications.

Zep reportedly demonstrated strong performance on LongMemEval, achieving significant improvements in both accuracy and latency compared to the baseline, especially on complex tasks such as multi-session synthesis and temporal inference.

Benchmarking is a complex endeavor, and evaluating competitor products requires even more diligence and expertise to ensure fair and accurate comparisons. From the detailed rebuttal presented by Zep, it appears that Mem0's claimed SOTA performance is based on a flawed benchmark (LoCoMo) and a faulty implementation of a competitor's system (Zep).

When properly evaluated under the same benchmark, Zep significantly outperforms Mem0 in terms of accuracy and shows high competitiveness in terms of search latency, especially when comparing graph-based implementations. This difference highlights the critical importance of a rigorous experimental design and a deep understanding of the system being evaluated to draw credible conclusions.

Going forward, the AI field desperately needs better and more representative benchmarking. Industry observers are also encouraging Mem0 teams to evaluate their products on more challenging and relevant benchmarks such as LongMemEval, where Zep has already published its results, in order to make more meaningful side-by-side comparisons of the long-term memory capabilities of AI intelligences. It's not just about the reputation of individual products, it's about the right direction of technological progress for the industry as a whole.

Reference:ZEP: A Temporal Knowledge Graph Architecture for Intelligent Body Memory

© Copyright notes

The copyright of the article belongs to the author, please do not reprint without permission.

Related posts

No comments...