fal: a generative media model API for developers of rich media applications

General Introduction

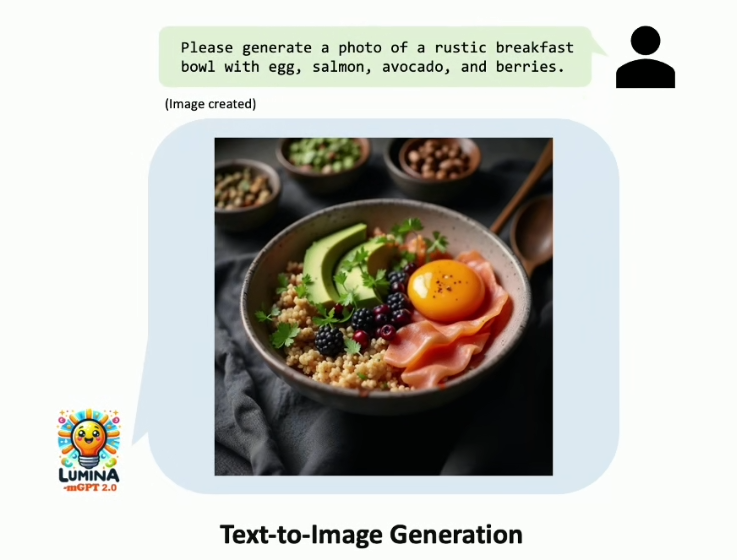

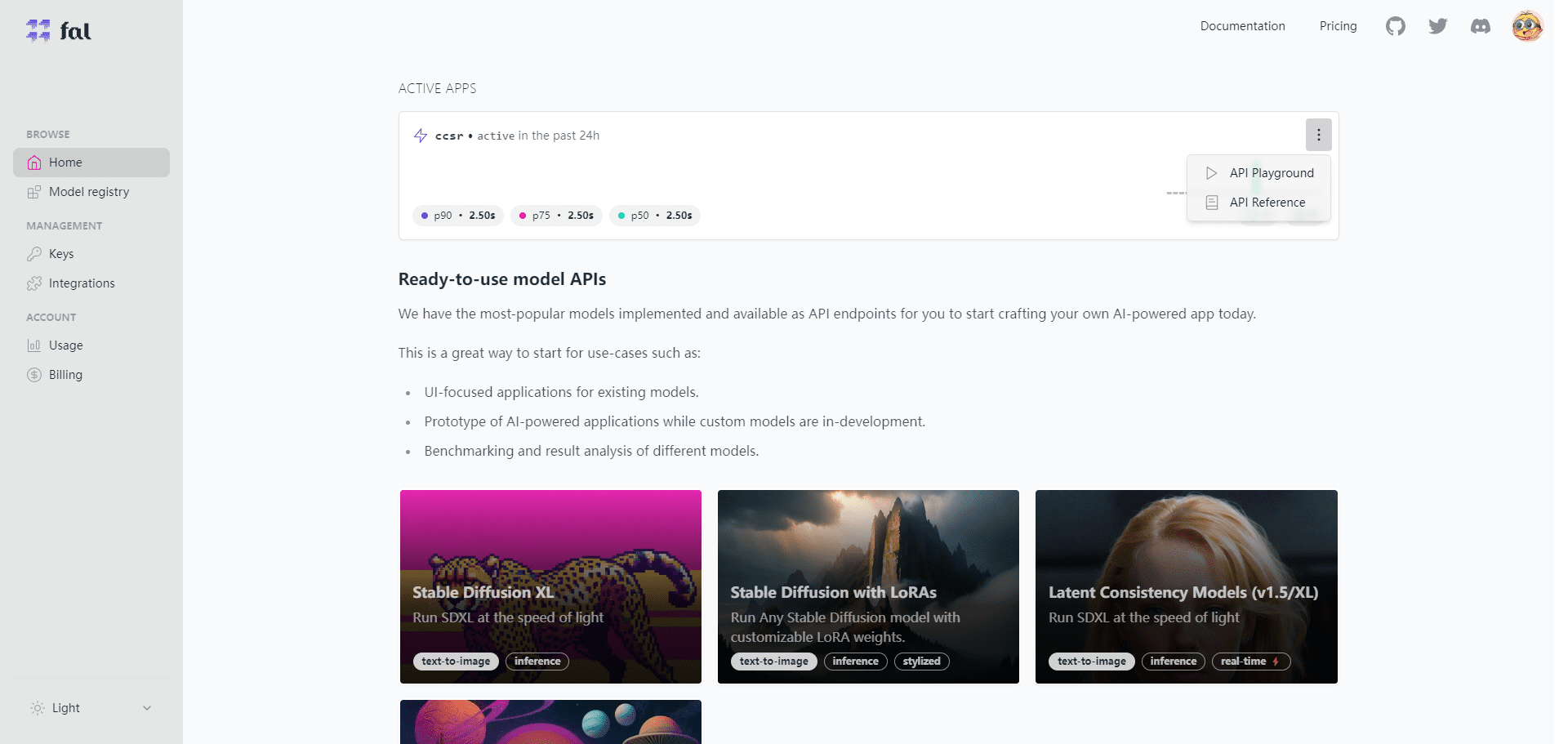

fal is an online AI inference platform for building real-time AI applications on top of high-quality generative media models for images, video, and audio. It has no cold starts and is billed pay-as-you-go. fal provides a variety of pre-trained generative models, such as Stable Diffusion XL, Stable Diffusion with LoRAs, and Optimized Latent Consistency (SDv1.5), which let users quickly generate images from simple text descriptions and rough sketches.

fal also lets users upload custom models or use shared models, with fine-grained control and automatic scaling up and down. It supports multiple machine types, such as GPU-A100, GPU-A10G, and GPU-T4, to meet different performance and cost requirements. Detailed documentation and examples help users get started quickly.

Powered by its proprietary fal inference engine, the platform can run diffusion models up to 4x faster than alternatives, enabling new real-time AI experiences. fal.ai, founded in 2021 and headquartered in San Francisco, aims to lower the barriers to creative expression by optimizing inference speed and efficiency.

Feature List

- Efficient inference engine: Provides an inference engine for diffusion models, billed as the world's fastest, that runs them up to 4x faster than alternatives.

- Multiple generative models: Supports a variety of pre-trained generative models such as Stable Diffusion 3.5 and FLUX.1.

- LoRA training: Provides LoRA training tools that can personalize a model or train a new style in less than 5 minutes.

- API integration: Client libraries for JavaScript, Python, and Swift make integration straightforward for developers.

- Real-time inference: Supports real-time media generation for interactive creative tools and camera input.

- Cost optimization: Pay-per-use billing keeps compute costs proportional to actual usage.

Usage Guide

Installation and Integration

- Register an account: Visit fal.ai and sign up for a developer account.

- Get an API key: After logging in, generate your API key on the "API Key" page.

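- Install a client library: Pick the client for your language and call a model.

- JavaScript:

```javascript
// Install first: npm install @fal-ai/client
// In Node.js the client reads the API key from the FAL_KEY environment variable.
import { fal } from "@fal-ai/client";

const result = await fal.subscribe("fal-ai/fast-sdxl", {
  input: { prompt: "photo of a cat wearing a kimono" },
  logs: true,
  // Print log messages while the request is being processed.
  onQueueUpdate: (update) => {
    if (update.status === "IN_PROGRESS") {
      update.logs.map((log) => log.message).forEach((message) => console.log(message));
    }
  },
});
```

- Python:

```python
# Install first: pip install fal-client
# The client reads the API key from the FAL_KEY environment variable.
import fal_client

result = fal_client.subscribe(
    "fal-ai/fast-sdxl",
    arguments={"prompt": "photo of a cat wearing a kimono"},
)
print(result)
```

- Swift:

```swift
// Module, type, and method names are reproduced from the original snippet;
// verify them against the current fal Swift client before use.
import FalAI

let client = FalClient(apiKey: "YOUR_API_KEY")
client.subscribe(model: "fal-ai/fast-sdxl", input: ["prompt": "photo of a cat wearing a kimono"]) { result in
    print(result)
}
```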
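The API key obtained in the steps above is usually better read from the environment than hard-coded into source files. The following is a minimal sketch of that idea. It assumes the key is stored in an environment variable named `FAL_KEY`; both `load_api_key` and `mask_key` are hypothetical helpers written for this illustration, not part of any fal library.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None, name: str = "FAL_KEY") -> str:
    """Return the API key stored in the environment variable `name`.

    `FAL_KEY` is an assumed variable name; this helper is hypothetical.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable to your fal API key")
    return key


def mask_key(key: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters so the key can be logged safely."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Failing early with a clear message when the variable is missing tends to be easier to debug than an authentication error returned later by the API.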

Using Generative Models

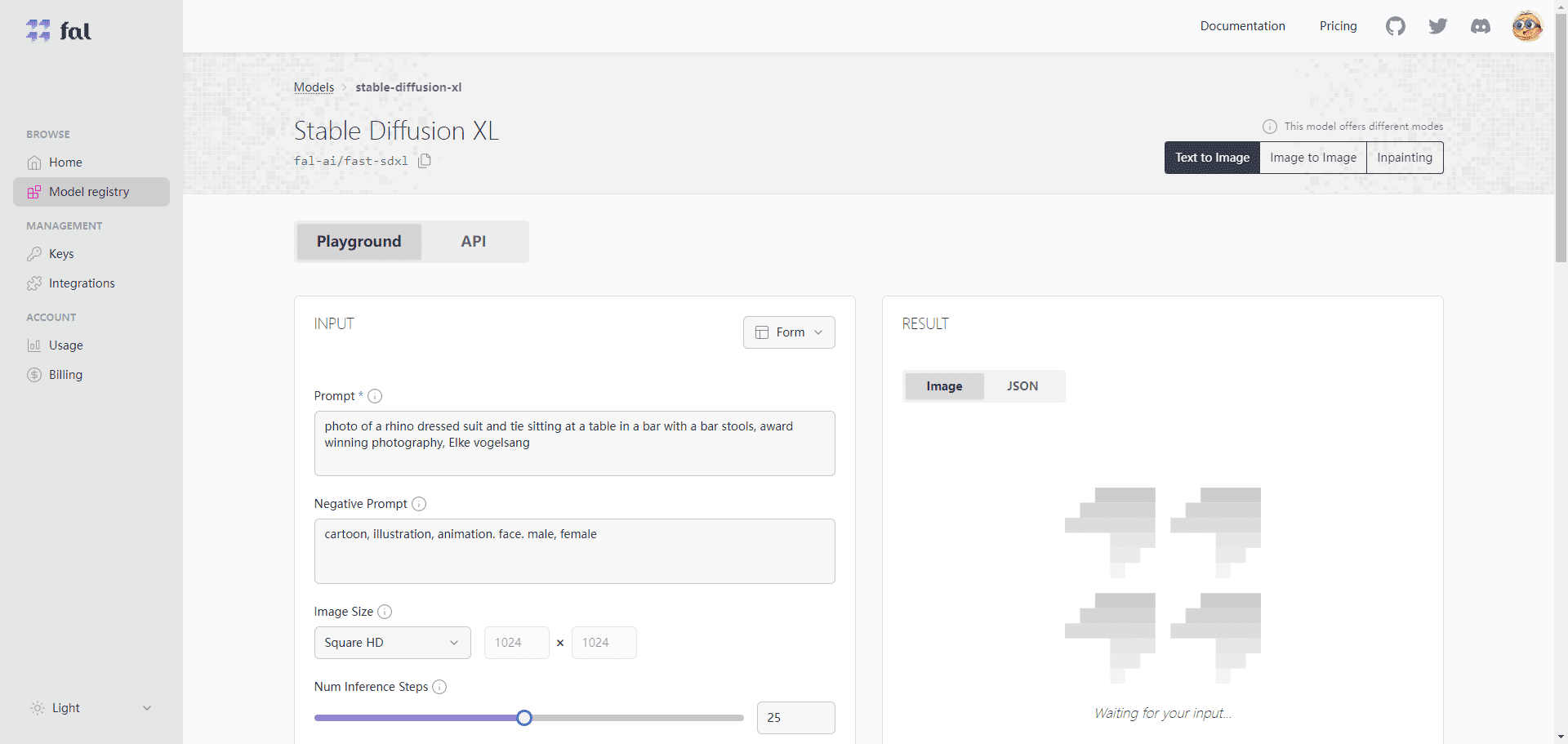

- Select a model: Choose the appropriate model for your project from fal.ai's model library, such as Stable Diffusion 3.5 or FLUX.1.

- Configure parameters: Set model parameters, such as the number of inference steps and the input image size, according to project requirements.

- Run inference: Call the API to run inference and retrieve the generated media.

- Optimize and adjust: Based on the generated results, adjust the parameters or switch to a different model.
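The configure-then-run steps above can be sketched as a small wrapper that validates parameters before any request is sent. Everything here is illustrative: the field names `num_inference_steps` and `image_size`, the step limits, and the helpers `build_input` and `run_inference` are assumptions, and each model defines its own input schema. The `subscribe` argument is any callable that takes a model id and an `arguments` mapping, which lets the sketch run without network access.

```python
from typing import Any, Callable, Dict

# Hypothetical limits for illustration; real limits depend on the chosen model.
MIN_STEPS, MAX_STEPS = 1, 50


def build_input(prompt: str, steps: int = 25, width: int = 1024, height: int = 1024) -> Dict[str, Any]:
    """Validate parameters and build the input payload for one inference call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if not MIN_STEPS <= steps <= MAX_STEPS:
        raise ValueError(f"steps must be between {MIN_STEPS} and {MAX_STEPS}")
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return {
        "prompt": prompt,
        "num_inference_steps": steps,  # assumed field name
        "image_size": {"width": width, "height": height},  # assumed field name
    }


def run_inference(subscribe: Callable[..., Any], model_id: str, **params: Any) -> Any:
    """Build the payload from `params` and pass it to the injected `subscribe` callable."""
    return subscribe(model_id, arguments=build_input(**params))
```

For the adjustment step, the same wrapper can be called again with a different `steps` value or `model_id` and the outputs compared side by side.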

LoRA Training

- Upload data: Prepare the training data and upload it to the fal.ai platform.

- Select a training model: Choose a suitable base model for LoRA training, such as FLUX.1.

- Configure training parameters: Set training parameters such as the learning rate and the number of training steps.

- Start training: Launch the training job; the platform completes training and produces a new style model in a short time.

- Apply the new model: Run inference with the newly trained model to generate personalized media content.
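The training steps above map naturally onto two payloads: one describing the training job and one applying the trained LoRA at inference time. The sketch below shows that shape only. The class `LoraTrainingConfig`, the helper `lora_inference_input`, every field name (`images_data_url`, `learning_rate`, `steps`, `loras`, `path`, `scale`), and the default values are assumptions for illustration; the real training endpoint defines its own schema.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class LoraTrainingConfig:
    """Hypothetical container for the training parameters listed above."""

    images_url: str               # URL of the uploaded training data
    learning_rate: float = 1e-4   # illustrative default, not a recommendation
    steps: int = 1000             # illustrative default, not a recommendation

    def validate(self) -> None:
        if not self.images_url.startswith(("http://", "https://")):
            raise ValueError("images_url must be an http(s) URL to the uploaded data")
        if not 0 < self.learning_rate < 1:
            raise ValueError("learning_rate must be between 0 and 1")
        if self.steps <= 0:
            raise ValueError("steps must be positive")

    def to_payload(self) -> Dict[str, Any]:
        """Return the training request body (assumed field names)."""
        self.validate()
        return {
            "images_data_url": self.images_url,
            "learning_rate": self.learning_rate,
            "steps": self.steps,
        }


def lora_inference_input(prompt: str, lora_url: str, scale: float = 1.0) -> Dict[str, Any]:
    """Build an inference payload that applies a trained LoRA (assumed field names)."""
    if scale < 0:
        raise ValueError("scale must not be negative")
    return {"prompt": prompt, "loras": [{"path": lora_url, "scale": scale}]}
```

Keeping validation next to the configuration catches a bad learning rate or a missing data URL before a training job is submitted.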

Every model is offered through both a debugging interface and an API. Once a model behaves as expected in the debugging interface, you can call it through the API:

Models available on fal

| Model name | Summary | Category | Detailed description |
| --- | --- | --- | --- |
| Stable Diffusion with LoRAs | Run any Stable Diffusion model with custom LoRA weights | text-to-image | LoRA (Low-Rank Adaptation) applies small sets of fine-tuned weights on top of a base model; adjusting the LoRA weights controls the style and detail of the generated image |
| Stable Diffusion XL | Run SDXL at the speed of light | text-to-image | SDXL is a diffusion-based image generation model that produces high-quality images in few inference steps, with more stable results than traditional GAN methods |
| Stable Cascade | Image generation in a smaller and cheaper latent space | text-to-image | Stable Cascade generates images through multiple stages of latent space, producing high-resolution images at low computational cost, which suits mobile devices and edge computing |
| Creative Upscaler | Create creatively upscaled images | image-to-image | Creative Upscaler enlarges images and adds creative elements such as textures, colors, and shapes while maintaining image clarity |
| CCSR Upscaler | State-of-the-art image upscaler | image-to-image | CCSR Upscaler is a deep learning-based upscaler that can enlarge an image to four or more times its original resolution without introducing blur or distortion |
| PhotoMaker | Customize realistic character photos by stacking ID embeddings | image-to-image | PhotoMaker generates realistic character photos and lets the user control the character's appearance, expression, pose, and background by adjusting ID embeddings |
| Whisper | A model for speech transcription and translation | speech-to-text | Whisper is an end-to-end Transformer-based speech recognition and translation model that converts speech to text in a single step, supporting multiple languages and dialects |
| Latent Consistency (SDXL & SDv1.5) | Generate high-quality images with minimal inference steps | text-to-image | Latent Consistency improves the efficiency of image generation by producing high-quality images in fewer inference steps while keeping the latent space consistent |
| Optimized Latent Consistency (SDv1.5) | Generate high-quality images with minimal inference steps; optimized for 512×512 input images | image-to-image | Optimized Latent Consistency is tuned for a specific input image size and produces high-quality images in fewer inference steps while keeping the latent space consistent |
| Fooocus | Automatic optimization and quality improvement with default parameters | text-to-image | Fooocus lets the user generate high-quality images without adjusting any parameters, applying automatic optimization and quality improvement techniques to the results |
| InstantID | Zero-shot identity-preserving generation | image-to-image | InstantID generates images that keep the identity of the person in the original image without any additional training data, while allowing other attributes such as hairstyle, clothing, and background to change |
| AnimateDiff | Animate your ideas with AnimateDiff | text-to-video | AnimateDiff generates short video clips from a text description, supporting a variety of styles and themes such as cartoon, realistic, and abstract |
| AnimateDiff Video to Video | Add style to your videos with AnimateDiff | video-to-video | AnimateDiff Video to Video restyles a video: the user inputs a video and a style description and receives a new video, with support for styles such as cartoon, realistic, and abstract |
| MetaVoice | MetaVoice-1B is a 1.2 billion parameter base model for TTS (text-to-speech), trained on 100,000 hours of speech | text-to-speech | MetaVoice generates speech from text in different languages and voices, supporting multiple languages and dialects as well as vocal characteristics such as pitch, rhythm, and emotion |
| MusicGen | Create high-quality music from text descriptions or melodic cues | text-to-audio | MusicGen generates music in different styles and themes from text descriptions or melodic cues, supporting a wide range of instruments and timbres as well as musical features such as beats, chords, and melody |
| Illusion Diffusion | Create illusions from images | text-to-image | Illusion Diffusion generates new images containing visual illusions from an input image and a description of the illusion |
| Stable Diffusion XL Image to Image | Run SDXL image-to-image at the speed of light | image-to-image | Stable Diffusion XL Image to Image generates a new image from an input image, supporting tasks such as style transfer, super-resolution, and image restoration |
| Comfy Workflow Executor | Execute Comfy workflows in fal | json-to-image | Comfy Workflow Executor generates images from workflows supplied in JSON format, supporting workflow components such as data, models, operations, and outputs |
| Segment Anything Model | SAM model | image-to-image | Segment Anything Model produces a segmentation mask from an input image and supports tasks such as semantic segmentation, instance segmentation, and face segmentation |
| TinySAM | Distilled Segment Anything Model | image-to-image | TinySAM is a distilled version of the Segment Anything Model that achieves segmentation results similar to the original with a smaller model and faster inference |
| Midas Depth Estimation | Create depth maps using Midas depth estimation | image-to-image | Midas Depth Estimation generates a depth map from an input image, with support for output formats such as grayscale, color, and pseudo-color |
| Remove Background | Remove the background from an image | image-to-image | Remove Background returns the input image with its background removed, handling a wide range of backgrounds such as natural landscapes, indoor scenes, and complex objects |
| Upscale Images | Enlarge an image by a given factor | image-to-image | Upscale Images generates an enlarged image from an input image and a scale factor, and supports image formats such as JPG, PNG, and BMP |
| ControlNet SDXL | Image generation using ControlNet | image-to-image | ControlNet SDXL generates new images guided by a control image, such as an edge map, depth map, or pose |
| Inpainting sdxl and sd | Inpaint images with SD and SDXL | image-to-image | Inpainting sdxl and sd regenerates the masked region of an input image, supporting tasks such as removing watermarks, filling in gaps, and eliminating noise |
| Animatediff LCM | Animate your text with a latent consistency model | text-to-image | Animatediff LCM generates short video clips from text and a frame count, with support for latent consistency models such as SDXL, SDv1.5, and SDv1.0 |
| Animatediff SparseCtrl LCM | Animate your drawings with latent consistency models | text-to-video | Animatediff SparseCtrl LCM generates short video clips from drawings and a frame count, with support for latent consistency models such as SDXL, SDv1.5, and SDv1.0 |
| Controlled Stable Video Diffusion | Generate short video clips from your images | image-to-image | Controlled Stable Video Diffusion generates short video clips from input images and control parameters such as motion, angle, and speed |
| Magic Animate | Generate short video clips from motion sequences | image-to-image | Magic Animate generates short video clips from an input image and a motion sequence, supporting a variety of motion sequence formats |
| Swap Face | Swap faces between two images | image-to-image | Swap Face generates a new image from two input images and supports a wide range of subjects, such as people, animals, and cartoons |
| IP Adapter Face ID | High-quality zero-shot personalization | image-to-image | IP Adapter Face ID generates personalized images from an input image and a description, supporting changes to attributes such as hairstyle, clothing, and background |
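Because every row in the table carries a category, a small lookup can help narrow the list to models that fit a given task. The sketch below copies a handful of (model name, category) pairs from the table; `MODELS`, `group_by_category`, and `models_for` are hypothetical names for this illustration, and the list is not a complete or authoritative catalog.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# A few (model name, category) pairs copied from the table above.
MODELS: List[Tuple[str, str]] = [
    ("Stable Diffusion XL", "text-to-image"),
    ("Stable Cascade", "text-to-image"),
    ("Creative Upscaler", "image-to-image"),
    ("Whisper", "speech-to-text"),
    ("AnimateDiff", "text-to-video"),
    ("MetaVoice", "text-to-speech"),
    ("MusicGen", "text-to-audio"),
]


def group_by_category(models: Sequence[Tuple[str, str]] = MODELS) -> Dict[str, List[str]]:
    """Map each category to the model names listed under it, in table order."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for name, category in models:
        grouped[category].append(name)
    return dict(grouped)


def models_for(category: str, models: Sequence[Tuple[str, str]] = MODELS) -> List[str]:
    """Return the alphabetically sorted model names in `category`."""
    return sorted(name for name, cat in models if cat == category)
```

For example, `models_for("text-to-image")` returns the two text-to-image entries in the sample list, and an unknown category yields an empty list rather than an error.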

