LocalAI: an open source local AI deployment solution with support for multiple model architectures and a WebUI for managing models and APIs

General Introduction

LocalAI is an open source alternative to hosted AI services that runs locally and aims to provide API interfaces compatible with OpenAI, Claude, and others. It runs on consumer-grade hardware, does not require a GPU, and can handle a wide range of tasks, including text, audio, video, and image generation as well as voice cloning. LocalAI was created and is maintained by Ettore Di Giacinto. It supports a wide range of model formats and backends, including gguf, transformers, and diffusers, for local or on-premise deployments.

Function List

- Text generation: runs GPT-style large language models to generate text content.

- Audio generation: converts text to natural-sounding speech through the text-to-audio function.

- Image generation: generates images using Stable Diffusion models.

- Voice cloning: generates speech that resembles a reference voice.

- Distributed inference: supports P2P inference across multiple instances to improve inference efficiency.

- Model download: downloads and runs models directly from platforms such as Hugging Face.

- Integrated WebUI: provides an integrated web user interface for managing models and trying out features.

- Embedding generation: produces embeddings for use with vector databases.

- Constrained grammars: supports generating text whose output is constrained by a grammar.

- Vision API: provides image processing and analysis functions.

- Reranker API: reranks text content, such as candidate documents, by relevance.
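As an illustration of the embedding feature, the following minimal sketch requests an embedding from a running LocalAI server and compares two vectors. It assumes the server listens on port 8080 and exposes the OpenAI-style `/v1/embeddings` endpoint. The model name `text-embedding-ada-002` is an assumption and should be replaced with an embedding model that is actually installed. The helper names are hypothetical.

```python
import json
import math
import urllib.request

BASE_URL = "http://localhost:8080"          # assumption: default LocalAI port
EMBEDDING_MODEL = "text-embedding-ada-002"  # assumption: use an embedding model you have installed


def build_embedding_request(text, model=EMBEDDING_MODEL, base_url=BASE_URL):
    """Build a POST request for the OpenAI-style /v1/embeddings endpoint."""
    body = {"model": model, "input": text}
    return urllib.request.Request(
        f"{base_url}/v1/embeddings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(text, **kwargs):
    """Send the request and return the embedding vector as a list of floats."""
    request = build_embedding_request(text, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as resp:
        return json.loads(resp.read())["data"][0]["embedding"]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

With a server running, `cosine_similarity(embed("cat"), embed("kitten"))` would give a rough measure of how close the two texts are in embedding space. A vector database performs the same kind of comparison at scale.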

Installation process

- Using the installation script:

- Run the following command to download and install LocalAI:

curl -s https://localai.io/install.sh | sh

- Using Docker:

- If there is no GPU, run the following command to start LocalAI:

docker run -ti --name local-ai -p 8080:8080 localai/localai:latest-aio-cpu

- If you have an NVIDIA GPU, run the following command:

docker run -ti --name local-ai -p 8080:8080 --gpus all localai/localai:latest-aio-gpu-nvidia-cuda-12

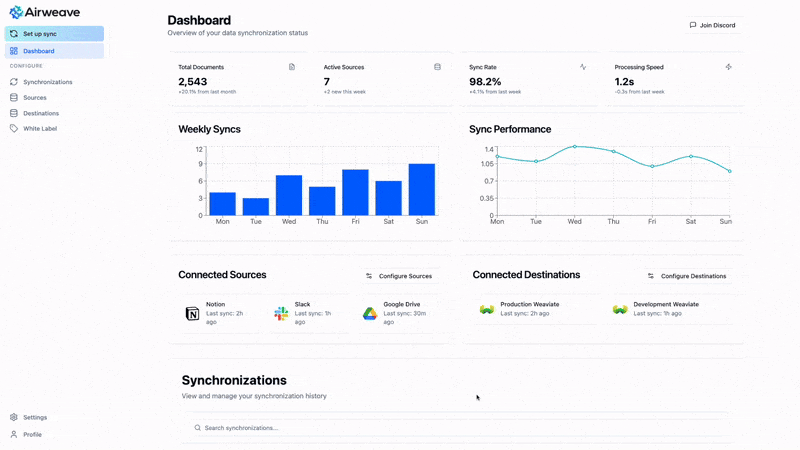

Usage Process

- Start LocalAI:

- After starting LocalAI through the installation process described above, open http://localhost:8080 in a browser to reach the WebUI.

- Loading Models:

- In the WebUI, navigate to the Models tab and select and load the desired model.

- Alternatively, use the command line to load the model, for example:

local-ai run llama-3.2-1b-instruct:q4_k_m

- Generate content:

- In the WebUI, select the appropriate function module (e.g., text generation, image generation, etc.), enter the required parameters, and click the Generate button.

- For example, to generate text, enter the prompt, select the model, and click the "Generate Text" button.

- Distributed inference:

- Configure multiple LocalAI instances to run distributed P2P inference and improve inference efficiency.

- Refer to the Distributed Inference Configuration Guide in the official documentation.
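The first two usage steps can also be checked from a script rather than the browser. The sketch below polls the server until it reports ready and then lists the loaded models. It assumes the default port 8080 from the Docker commands, the `/readyz` readiness endpoint, and the OpenAI-style `/v1/models` endpoint. The function names are hypothetical helpers, not part of LocalAI.

```python
import json
import time
import urllib.request

BASE_URL = "http://localhost:8080"  # assumption: port mapping from the Docker commands


def fetch(url, timeout=5.0, opener=urllib.request.urlopen):
    """Perform a GET request and return (status_code, body_bytes)."""
    with opener(url, timeout=timeout) as resp:
        return resp.status, resp.read()


def wait_until_ready(base_url=BASE_URL, attempts=30, delay=2.0,
                     fetch_fn=fetch, sleep=time.sleep):
    """Poll /readyz until it answers 200; return False if attempts run out."""
    for _attempt in range(attempts):
        try:
            status, _body = fetch_fn(f"{base_url}/readyz")
            if status == 200:
                return True
        except OSError:
            # Covers connection refused and HTTP errors while the server starts.
            pass
        sleep(delay)
    return False


def list_models(base_url=BASE_URL, fetch_fn=fetch):
    """Return the model ids reported by the /v1/models endpoint."""
    _status, body = fetch_fn(f"{base_url}/v1/models")
    payload = json.loads(body)
    return [model["id"] for model in payload.get("data", [])]
```

Calling `wait_until_ready()` followed by `list_models()` should show the names that can be passed as the `model` field in later API requests. The all-in-one images may take a while to become ready on first start while they download models.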

Advanced Features

- Custom Models:

- Users can download and load custom models from Hugging Face or an OCI registry to meet specific needs.

- API integration:

- LocalAI provides an OpenAI-compatible REST API that developers can integrate into existing applications.

- Refer to the official API documentation for detailed API usage.
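Because the API follows the OpenAI conventions, a chat request can be sent with nothing more than the Python standard library. The following is a minimal sketch, assuming a server on port 8080 and that the model started with `local-ai run` is addressable under the same name. If it is not, the `/v1/models` endpoint lists the names the server accepts. The helper functions are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"       # assumption: default LocalAI port
MODEL = "llama-3.2-1b-instruct:q4_k_m"   # assumption: name from the `local-ai run` example


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, temperature=0.7):
    """Build a POST request for the OpenAI-style /v1/chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant's text out of a chat completion response."""
    payload = json.loads(response_body)
    return payload["choices"][0]["message"]["content"]


def chat(prompt, **kwargs):
    """Send the prompt to the server and return the generated text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(resp.read())
```

For example, `chat("Summarize what LocalAI does in one sentence.")` would return the model's reply as a string. Existing OpenAI client libraries can usually be used as well by pointing their base URL at the local server.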

