Introduction to the OpenAI o1-mini Large Model

Advancing cost-efficient reasoning.

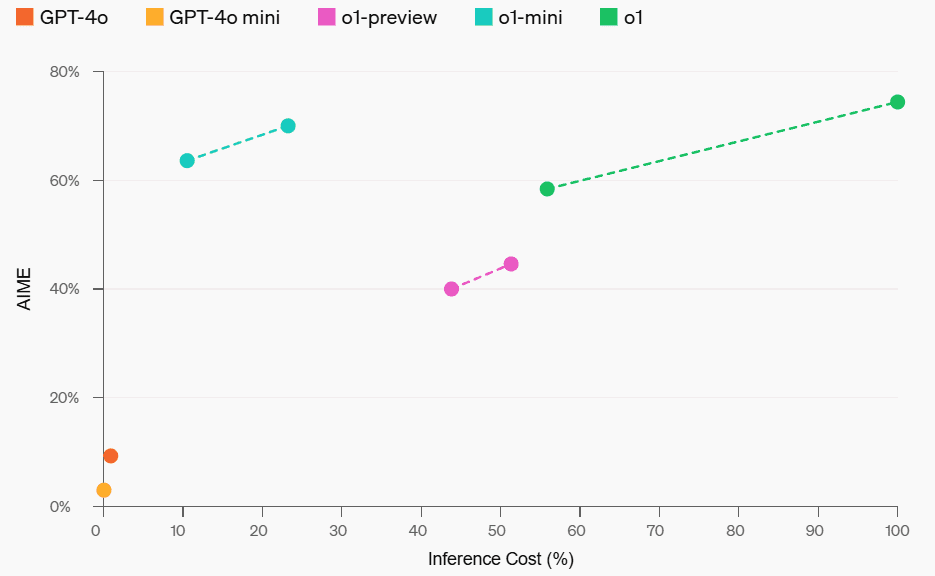

We are introducing OpenAI o1-mini, a cost-efficient reasoning model. o1-mini excels at STEM, especially mathematics and programming, nearly matching the performance of OpenAI o1 on evaluation benchmarks such as AIME and Codeforces. We expect o1-mini to be a faster, more affordable option for applications that require reasoning but do not depend on broad world knowledge.

Today, we are launching o1-mini to tier 5 API users at a price 80% lower than OpenAI o1-preview. ChatGPT Plus, Team, Enterprise, and Edu users can use o1-mini as an alternative to o1-preview, with higher usage limits and lower latency (see Model speed).
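Put as a ratio, writing $p$ for each model's API price (a symbol introduced here only for illustration; no actual prices are given above), an 80% reduction means o1-mini costs one fifth as much as o1-preview:

$$
p_{\text{o1-mini}} = (1 - 0.80)\, p_{\text{o1-preview}} = 0.20\, p_{\text{o1-preview}} = \tfrac{1}{5}\, p_{\text{o1-preview}}
$$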

Optimized for STEM reasoning

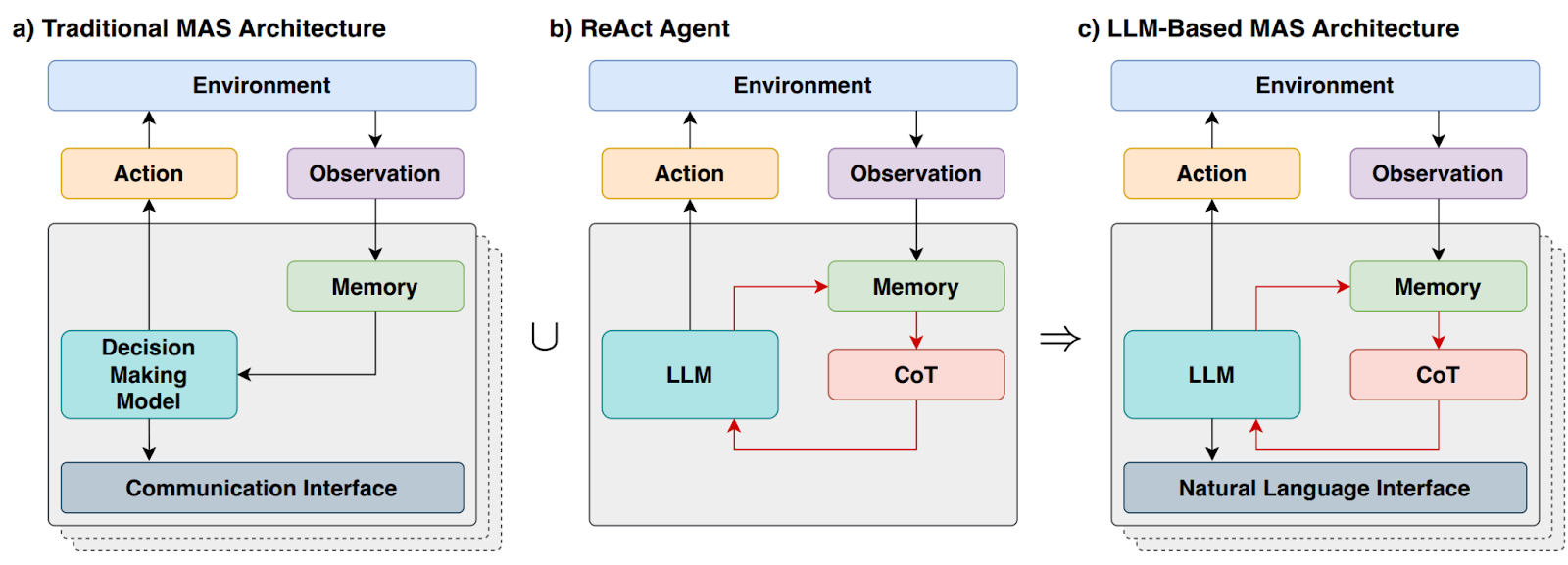

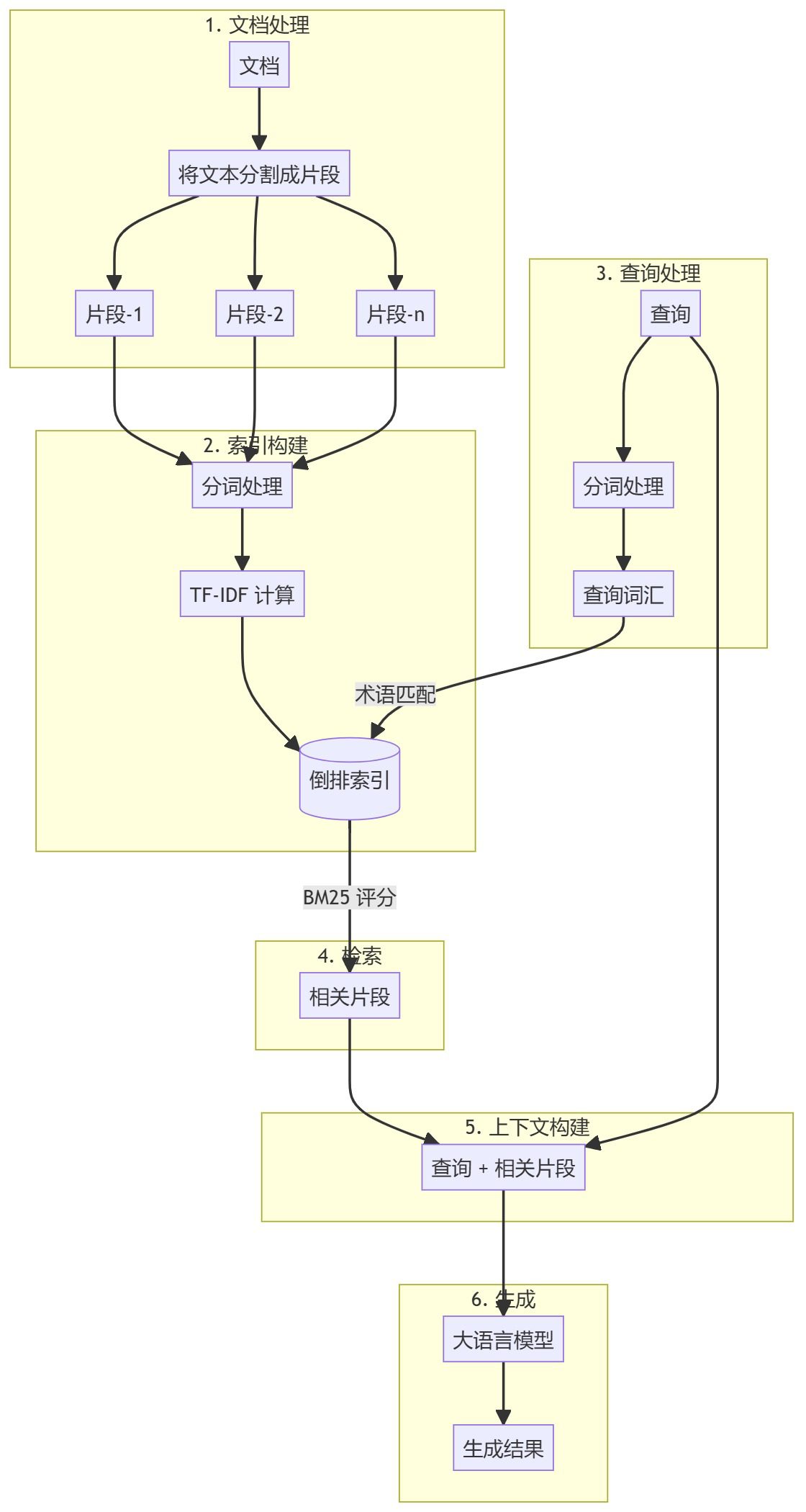

Large language models such as o1 are pre-trained on vast text datasets. While these high-capacity models have broad world knowledge, they can be expensive and slow in real-world applications. In contrast, o1-mini is a smaller model optimized for STEM reasoning during pre-training. After training with the same high-compute reinforcement learning (RL) pipeline as o1, o1-mini achieves comparable performance on many useful reasoning tasks while being significantly more cost-efficient.

On benchmarks requiring intelligence and reasoning, o1-mini performs well compared to o1-preview and o1. However, o1-mini performs worse on tasks requiring non-STEM factual knowledge (see Limitations).

Mathematics performance vs. inference cost

Math: In the high school AIME math competition, o1-mini (70.0%) is competitive with o1 (74.4%) while being significantly cheaper, and it outperforms o1-preview (44.6%). o1-mini's score (about 11 of 15 questions answered correctly) places it in approximately the top 500 U.S. high school students.
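As a rough check of the "about 11 of 15" figure, assuming the 70.0% score is read as the average fraction of a single 15-question AIME exam answered correctly:

$$
0.700 \times 15 = 10.5 \approx 11 \text{ questions}
$$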

Programming: On the Codeforces competition website, o1-mini achieves an Elo rating of 1650, which is competitive with o1 (1673) and higher than o1-preview (1258). This rating places o1-mini at approximately the 86th percentile of programmers who compete on the Codeforces platform. o1-mini also performs well on the HumanEval coding benchmark and on high-school-level cybersecurity Capture the Flag challenges (CTFs).
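To give a feel for what these rating gaps mean, the sketch below applies the textbook Elo expected-score formula, $E_A = 1 / (1 + 10^{(R_B - R_A)/400})$, to the ratings quoted above. This is an illustrative assumption: Codeforces uses its own Elo-like rating system, so the resulting values are only a rough head-to-head estimate and are not figures reported for these models. The helper name `elo_expected_score` is hypothetical.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score of A against B, between 0 and 1.

    Assumes the standard 400-point logistic scale; Codeforces' own
    rating system is only Elo-like, so treat results as approximate.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted in the text above.
ratings = {"o1-mini": 1650, "o1": 1673, "o1-preview": 1258}

for opponent in ("o1", "o1-preview"):
    p = elo_expected_score(ratings["o1-mini"], ratings[opponent])
    print(f"o1-mini vs {opponent}: expected score {p:.2f}")
# prints:
# o1-mini vs o1: expected score 0.47
# o1-mini vs o1-preview: expected score 0.91
```

Under this approximation, the 23-point gap to o1 is close to a coin flip, while the 392-point lead over o1-preview corresponds to an expected score of roughly 0.9 for o1-mini.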

Charts: Codeforces, HumanEval, Cybersecurity CTFs

STEM: On some academic benchmarks that require reasoning, such as GPQA (science) and MATH-500, o1-mini outperforms GPT-4o. o1-mini does not perform as well as GPT-4o on tasks such as MMLU, and it lags behind o1-preview on GPQA because it lacks broad world knowledge.

Charts: MMLU, GPQA, MATH-500

Human preference evaluation: We had human raters compare o1-mini to GPT-4o on open-ended prompts in a variety of domains, using the same methodology as in our [o1-preview vs. GPT-4o comparison](https://openai.com/index/learning-to-reason-with-llms/). Similar to o1-preview, o1-mini is preferred over GPT-4o in reasoning-heavy domains, but not in language-focused domains.

Chart: Human preference evaluation vs. chatgpt-4o-latest

Model speed

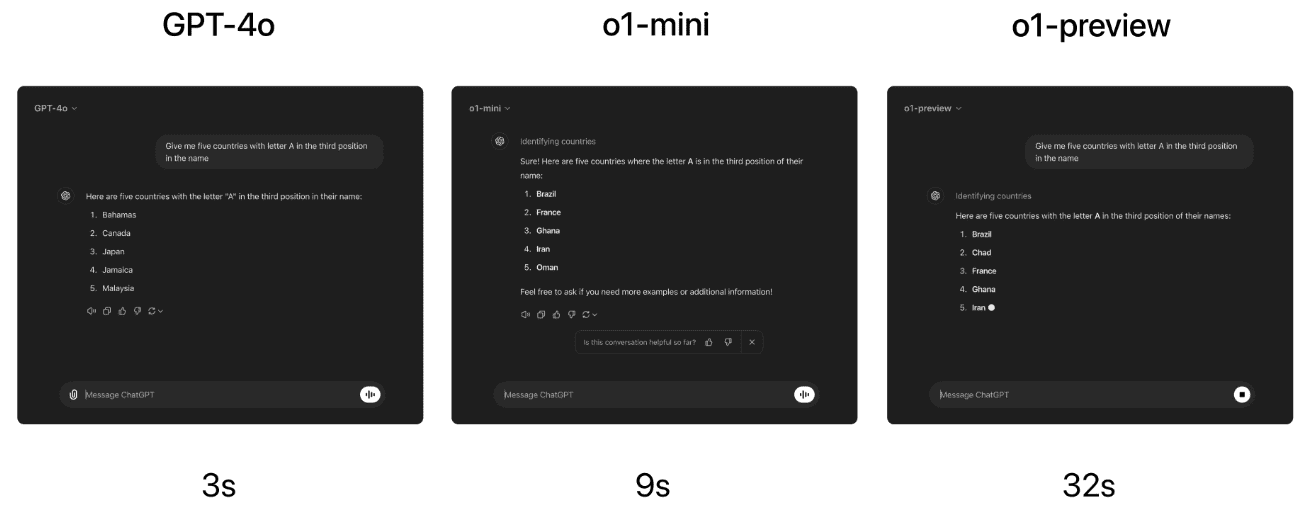

As a concrete example, we compared the responses of GPT-4o, o1-mini, and o1-preview on a word reasoning question. While GPT-4o answered incorrectly, both o1-mini and o1-preview answered correctly, and o1-mini reached the answer about 3 to 5 times faster.
